I recently had a project in which I needed to transfer a 60 GB SQLite database to SQL Server. After some research I found the sqlite3 and pyodbc modules, and set about scripting connections and insert statements.

The basic form of my script is to import the modules, setup the database connections, and iterate (via cursor) over the rows of the select statement creating insert statements and executing them.

The issue here is that this method results in single inserts being sent one at a time yielding less than satisfactory performance. Inserting 35m+ rows in this fashion takes ~5hrs on my system.

After some researching I found the general community suggesting solutions like the following:

- executemany

- fast_executemany

https://towardsdatascience.com/how-i-made-inserts-into-sql-server-100x-faster-with-pyodbc-5a0b5afdba5 - dumping data to csv files and using bulk insert

https://github.com/mkleehammer/pyodbc/issues/619 - using pandas and sqlalchemy

https://github.com/mkleehammer/pyodbc/issues/812

In addition, based on my prior DBA experience, my initial thought was to create a string with BEGIN TRANSACTION, concatenate a batch of INSERT statements, end the string with a COMMIT TRANSACTION, and finally pass the batches of transactions as strings to pyodbc.execute.

I did some testing, please find my results below.

For this experiment I used a production dataset consisting of a single SQLite table with 18,050,355 rows. The table has four columns with datatypes of integer, integer, text, and real. The real column was converted to an integer as it was improperly defined in the SQLite database and was validated to be an integer.

1 by 1 inserts (~80 minutes)

The 1 by 1 inserts were executed by performing inserts as such:

select_stmt="SELECT col0, col1,col2,CAST(col3 AS INT) FROM table"

for row in sqlite3Cursor.execute(select_stmt):

insert_stmt = f"INSERT INTO table(col0,col1,col2,col3) VALUES ({row[0]},{row[1]},'{row[2]}',{row[3]})"

pyodbcCursor.execute(insert_stmt)

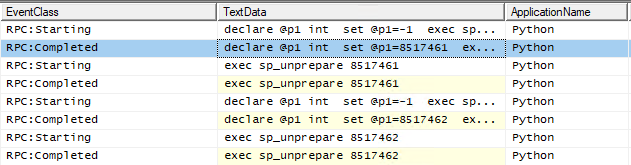

Using SQL Server Profiler I could see the 1 by 1 inserts happening:

executemany (~95 minutes)

fast_executemany (~75 minutes)

For this test I simply added a line of code:pyodbcCursor.fast_executemany = True

On the profiler side of things this looked exactly like executemany, with repetitive executions of single prepared statements.

This did run quite a bit faster then executemany, however it only ran 5 minutes faster than the 1 by 1 inserts.

SQL Server Transaction (~20 minutes)

https://docs.microsoft.com/en-us/sql/t-sql/language-elements/begin-transaction-transact-sql?view=sql-server-ver15

For the code modification on this test, I reverted back to a row by row cursor loop which builds the batches of 100 inserts per transaction. It looks like this:

counts = 0

select_stmt="SELECT col0, col1,col2,CAST(col3 AS INT) FROM table"

insert_stmt="BEGIN TRANSACTION \r\n"

for row in sqlite3Cursor.execute(select_stmt):

counts += 1

insert_stmt = f"INSERT INTO table(col0,col1,col2,col3) VALUES ({row[0]},{row[1]},'{row[2]}',{row[3]}) \r\n"

#The modulus is the batch size

if counts % 100 == 0:

insert_stmt+="COMMIT TRANSACTION"

pyodbcCursor.execute(insert_stmt)

pyodbcCursor.commit()

insert_stmt="BEGIN TRANSACTION \r\n"

#get the last batch

if insert_stmt!="BEGIN TRANSACTION \r\n":

insert_stmt+="COMMIT TRANSACTION"

pyodbcCursor.execute(insert_stmt)

pyodbcCursor.commit()

This also completes in 20 minutes which is 3 to 4 times faster than the next best test.

https://docs.microsoft.com/en-us/troubleshoot/sql/connect/fail-run-large-batch-sql-statements

TL;DR; I had to add SET NOCOUNT ON; in order to address this, however there was not a significant speed improvement with batch sizes larger than 400, for me.

Counter Log Review

Red is the SQL Server Transaction log drive (I’m in SIMPLE recovery mode).

Orange is the SQL Server Disk for data files.

Hi everyone, blogger stats is showing high interest in this blog post, so I thought I'd add that you can further increase performance through usage of multithreading:

ReplyDeletefrom multiprocessing.dummy import Pool as ThreadPool

How would you go by doing this? With the example above, would you have for example 5 workers and run each batch?

DeleteI used:

Deletefrom multiprocessing.dummy import Pool as ThreadPool

I then created a function to run the batched insert statements

Last, from '__main__' I called the function:

pool = ThreadPool(2)

results = pool.map([function], [variable])

pool.close()

pool.join()

In my case the variable was a list of table names and I sent in a list of about a dozen.

HTH

i can insert 42.000 rows in 2.5 minutos, the problem was if any row is not correct, all trasaccion is cancel. :(. my solve was . this rows send one by one. tnks for the code

DeleteHi, thanks a lot for posting this!

ReplyDeleteI've been struggling with inserting data using python into SQL Server and getting horrendous speeds. I've tried all the suggestions you link in the beginning and then some other simple/already written solutions. None has given any clear benefit. This post convinced me that I should follow in your footsteps and try that as the next thing.

Is there an error in "SQL Server Transaction" Code?

ReplyDeleteI don't see how the new insert_stmt values are being appended.

Should:

insert_stmt = f"INSERT INTO table(col0,col1,col2,col3) VALUES ({row[0]},{row[1]},'{row[2]}',{row[3]}) \r\n"

Be this?:

insert_stmt += f"INSERT INTO table(col0,col1,col2,col3) VALUES ({row[0]},{row[1]},'{row[2]}',{row[3]}) \r\n"

OR:

insert_stmt = f"{insert_stmt}INSERT INTO table(col0,col1,col2,col3) VALUES ({row[0]},{row[1]},'{row[2]}',{row[3]}) \r\n"

good catch, yes it should be insert_stmt += ...

ReplyDeleteThanks for your post. I am working on migrating data from sybase to sql server and this was very helpful

ReplyDeletethanks!

ReplyDeleteOne issue you may have is that autocommit wasn't set to false being running the fast_executemany test. Set this to false before you run it and should act as a bulk insert instead.

ReplyDeleteThank you so much! This has worked wonders. 21million rows copied in 10 minutes.

ReplyDelete